TODAY'S POST: "HOW TO EXTRACT LINKS OR URLs FROM A WEBPAGE OR WEBSITE, EVEN ONLINE"

1. WHY I NEED SUCH ONLINE TOOLS >>

~ With such tools, I can find the links within a webpage without downloading the page myself.

~ They also help when I can't open a webpage directly, for example because the website has been blocked by an administrator.

~ In that case, I can use such an online tool as a kind of proxy website: it fetches the page for me and gives me the links I need.

I WILL DISCUSS LATER HOW THESE TOOLS CAN BE USED AS A PROXY SITE.

2. HERE IS THE LIST OF SUCH TOOLS >> ONLINE

<<1>>

http://www.webmaster-toolkit.com/link-checker.shtml

~ This tool only checks the first 60 links on a page.

~ SUPPORTED LINK TYPES: { HREFs/SRCs }.
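To show roughly what "HREFs/SRCs" means, here is a minimal Python sketch using the standard library's html.parser. It is not how webmaster-toolkit.com works internally (the post doesn't say); it just collects every href and src attribute value and stops at a cap, with `limit=60` chosen only to mirror the 60-link limit above. The names `HrefSrcCollector` and `extract_href_src` are hypothetical.

```python
from html.parser import HTMLParser


class HrefSrcCollector(HTMLParser):
    """Collects href and src attribute values, stopping after a fixed cap."""

    def __init__(self, limit=60):
        super().__init__()
        self.limit = limit  # assumed cap, mirroring the "first 60 links" note
        self.links = []     # (tag, attribute, value) tuples in page order

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            # value is None for bare attributes, so skip empty ones
            if name in ("href", "src") and value and len(self.links) < self.limit:
                self.links.append((tag, name, value))


def extract_href_src(html, limit=60):
    """Return up to `limit` (tag, attribute, value) tuples found in `html`."""
    parser = HrefSrcCollector(limit)
    parser.feed(html)
    parser.close()
    return parser.links


if __name__ == "__main__":
    page = '<a href="/about">About</a><img src="logo.png"><script src="app.js"></script>'
    for tag, attr, value in extract_href_src(page):
        print(f"{tag}.{attr} = {value}")
```

Both `<a href>` links and `<img src>` / `<script src>` resources come out, which is the HREF/SRC distinction the tool refers to.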

<<2>>

http://www.webtoolhub.com/tn561364-link-extractor.aspx

~ Displays all links within a webpage.

~ Displays a categorized list of URLs.

~ Converts relative URLs to absolute URLs.

~ Shows general attributes such as 'title', anchor text or the 'alt' attribute.
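The features listed for this tool can be approximated in a minimal Python sketch: `urljoin` turns relative links into absolute ones, and the parser records title, anchor text and alt along the way. Assumption: "categorized" is taken here to mean internal vs. external by host name, which may not match what webtoolhub actually shows. `LinkDetailParser` and `categorize` are hypothetical names.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkDetailParser(HTMLParser):
    """Collects <a> and <img> links with title, anchor text and alt values."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []           # one dict per link found
        self._open_anchor = None  # the <a> currently collecting anchor text

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and attrs.get("href"):
            self._open_anchor = {
                "kind": "a",
                "url": urljoin(self.base_url, attrs["href"]),  # relative -> absolute
                "title": attrs.get("title") or "",
                "anchor_text": "",
            }
            self.links.append(self._open_anchor)
        elif tag == "img" and attrs.get("src"):
            self.links.append({
                "kind": "img",
                "url": urljoin(self.base_url, attrs["src"]),
                "title": attrs.get("title") or "",
                "alt": attrs.get("alt") or "",
            })

    def handle_data(self, data):
        if self._open_anchor is not None:
            self._open_anchor["anchor_text"] += data  # text inside the <a>

    def handle_endtag(self, tag):
        if tag == "a" and self._open_anchor is not None:
            self._open_anchor["anchor_text"] = self._open_anchor["anchor_text"].strip()
            self._open_anchor = None


def categorize(links, base_url):
    """Split links into internal (same host as base_url) and external."""
    host = urlparse(base_url).netloc
    groups = {"internal": [], "external": []}
    for link in links:
        key = "internal" if urlparse(link["url"]).netloc == host else "external"
        groups[key].append(link)
    return groups
```

Feed the page HTML into `LinkDetailParser(page_url)`, then pass its `links` list to `categorize` to get the two groups.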

<<3>>

http://www.iwebtool.com/link_extractor

~ This tool limits how many requests you can make within one hour.
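The post doesn't say how many requests iwebtool allows per hour or how it counts them. If you script your own extractor and want to respect a similar limit, one common approach is a rolling one-hour window; the sketch below assumes that approach, and `max_requests` is a hypothetical parameter, not iwebtool's real quota. `HourlyRequestLimiter` is likewise a hypothetical name.

```python
import time
from collections import deque


class HourlyRequestLimiter:
    """Allows at most `max_requests` calls in any rolling `window` of seconds."""

    def __init__(self, max_requests, window=3600.0, clock=time.monotonic):
        self.max_requests = max_requests  # hypothetical quota
        self.window = window              # one hour by default
        self.clock = clock                # injectable for testing
        self._stamps = deque()            # times of requests still inside the window

    def try_acquire(self):
        """Record a request and return True if allowed, otherwise return False."""
        now = self.clock()
        while self._stamps and now - self._stamps[0] >= self.window:
            self._stamps.popleft()  # forget requests older than the window
        if len(self._stamps) < self.max_requests:
            self._stamps.append(now)
            return True
        return False
```

Call `try_acquire()` before each page fetch and wait or skip when it returns False.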

3. HERE IS THE LIST OF SUCH TOOLS >> OFFLINE

<<1>>

http://code.google.com/p/websitelinkextractor/

~ Website Link Extractor is a small utility that extracts URLs from any web page. It requires .NET Framework 3.5 to be installed.

~ No installation is required; just run the executable.

~ Exports to Excel or CSV.

FOR THE ONLINE VERSION, USE:

http://util.thetechhub.com/online-link-extractor.aspx
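Website Link Extractor's Excel/CSV export can be imitated in a few lines with Python's csv module, since Excel opens CSV files directly. A minimal sketch, assuming the links are already available as (url, anchor text) pairs; `links_to_csv` is a hypothetical helper, not part of that tool.

```python
import csv
import io


def links_to_csv(links, stream):
    """Write (url, anchor_text) pairs to `stream` as CSV, with a header row."""
    writer = csv.writer(stream)  # quotes fields that contain commas or quotes
    writer.writerow(["url", "anchor_text"])
    for url, text in links:
        writer.writerow([url, text])


if __name__ == "__main__":
    buf = io.StringIO()
    links_to_csv([("https://example.com/a", "Page A"),
                  ("https://example.com/b", "B, with comma")], buf)
    print(buf.getvalue())
```

To write a real file, open it with `open("links.csv", "w", newline="", encoding="utf-8")` and pass that as `stream`; the csv module documentation recommends `newline=""` so no extra blank lines appear on Windows.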

<<2>>

http://www.seoclick.com/tools/website-links-extractor/

<<3>>

http://www.nirsoft.net/utils/addrview.html

~ You can save the extracted address list to a text, HTML or XML file.

~ Works without installation.

~ Extracts URLs from an offline *.html file or from a web address.

Download:

http://www.nirsoft.net/utils/addrview.zip

<<4>>

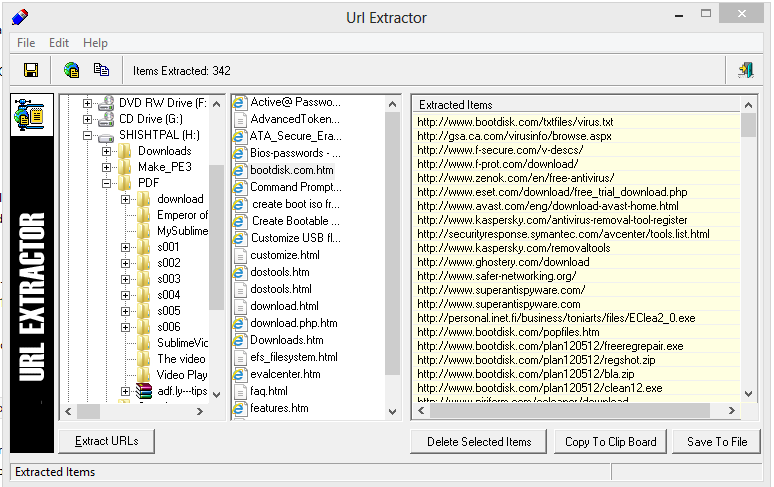

http://www.focalmedia.net/urlextract.html

~ Save web pages to your hard drive, then extract the URLs with URL Extractor.

~ This program extracts URLs from files on your local drives and saves them to standard text files.
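URL Extractor's approach (scan saved files on disk, then write the URLs to a text file) can be sketched in Python with a regular expression. The pattern below is a simplification assumed for illustration: it only recognizes http, https and ftp addresses and trims a few trailing punctuation marks, so it will miss or mangle some real-world URLs. `scan_folder` and `save_urls` are hypothetical names, and the default file patterns are an assumption.

```python
import re
from pathlib import Path

# Simplified pattern: a scheme followed by characters that are not
# whitespace, quotes or angle brackets. Real URL syntax is looser.
URL_RE = re.compile(r"""(?:https?|ftp)://[^\s"'<>]+""")


def scan_folder(folder, patterns=("*.htm", "*.html", "*.txt")):
    """Collect unique URLs, in first-seen order, from matching files under `folder`."""
    seen = {}  # dict keeps insertion order and drops duplicates
    for pattern in patterns:
        for path in sorted(Path(folder).rglob(pattern)):
            text = path.read_text(encoding="utf-8", errors="replace")
            for url in URL_RE.findall(text):
                seen.setdefault(url.rstrip(".,;)"), None)  # trim trailing punctuation
    return list(seen)


def save_urls(urls, out_file):
    """Write one URL per line to a plain text file."""
    Path(out_file).write_text("\n".join(urls) + "\n", encoding="utf-8")
```

For example, `save_urls(scan_folder("saved_pages"), "urls.txt")`, where `saved_pages` is a placeholder folder name.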

<<5>>

http://www.spadixbd.com/extracturl/index.htm

~ Extracts URLs along with title, description and keywords metadata from entire websites, lists of URLs or search engine results.

~ NOT A FREE PROGRAM: THE TRIAL VERSION CAN SAVE ONLY UP TO 5 URLs.
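Pulling the title, description and keywords metadata from a page comes down to reading the `<title>` element and the `<meta name="description">` and `<meta name="keywords">` tags. A minimal Python sketch with html.parser, assuming the page uses those standard name attributes; `PageMetaParser` and `page_metadata` are hypothetical names, and this handles a single page, not entire websites or search results.

```python
from html.parser import HTMLParser


class PageMetaParser(HTMLParser):
    """Collects <title> text plus the meta description and keywords."""

    def __init__(self):
        super().__init__()
        self.meta = {"title": "", "description": "", "keywords": ""}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            # name values like "Description" are matched case-insensitively
            name = (attrs.get("name") or "").lower()
            if name in ("description", "keywords"):
                self.meta[name] = (attrs.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.meta["title"] += data


def page_metadata(html):
    """Return a dict with the page's title, description and keywords."""
    parser = PageMetaParser()
    parser.feed(html)
    parser.close()
    parser.meta["title"] = parser.meta["title"].strip()
    return parser.meta
```

Pair it with a URL list (for example, links found on a page) and call `page_metadata` on each fetched page to build a table of URL, title, description and keywords.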
